5 Causes of Cloud Cost Swelling

We live in a world where humans have taken Nathan Rothschild’s famous “who owns the data owns the future” far too literally, becoming practically obsessed with generating a bazillion bytes of data every day. Don’t get me wrong: I think Mr. Rothschild was right, and information drives evolution. But let’s focus on the enormous volumes of data that must be collected and stored correctly. According to the Arcserve Data Attack Surface report, Cybersecurity Ventures predicts that the world will store 200 ZB of data by 2025, and Statista claims that 60% of this data is stored in the cloud.

Year after year, public, private, and hybrid cloud solutions capture a growing share of the IT infrastructure market from on-premises solutions, making cloud spending a significant concern for companies. According to Flexera, 81% of respondents call cloud costs a pain point, regretting that more than 30% of their cloud spending goes down the drain. So what causes cloud costs to balloon? Let’s take a closer look.

5 causes of cloud cost swelling

1. Complex cloud pricing and lack of cloud visibility

Cloud service pricing is complex. Does this statement sound familiar? Of course it does. You experience this headache every month when you receive a cloud bill that can run to 50 pages. At that scale, calculating the cost of infrastructure services manually is practically impossible, so forget about spreadsheets, for three reasons:

- Cost forecasting is challenging

- The actual price of a service may differ from the price listed on the cloud provider’s website

- For non-experts, estimating cloud costs is a painful and time-consuming experience; this job belongs to cloud professionals such as DevOps engineers and SREs (Site Reliability Engineers)

It is hard to control and protect what you cannot see. So why do so many organizations do so little to gain complete visibility and control over their multi-account environments? And what if the infrastructure is multi-cloud?

For eight years, DevOps engineers have combined Grafana (from Grafana Labs) for visualization with Prometheus for metrics collection and storage. While this stack gives you quite effective visibility into your cloud, setting up and maintaining such continuous monitoring pipelines requires significant time and effort from DevOps experts.

2. Over-provisioning

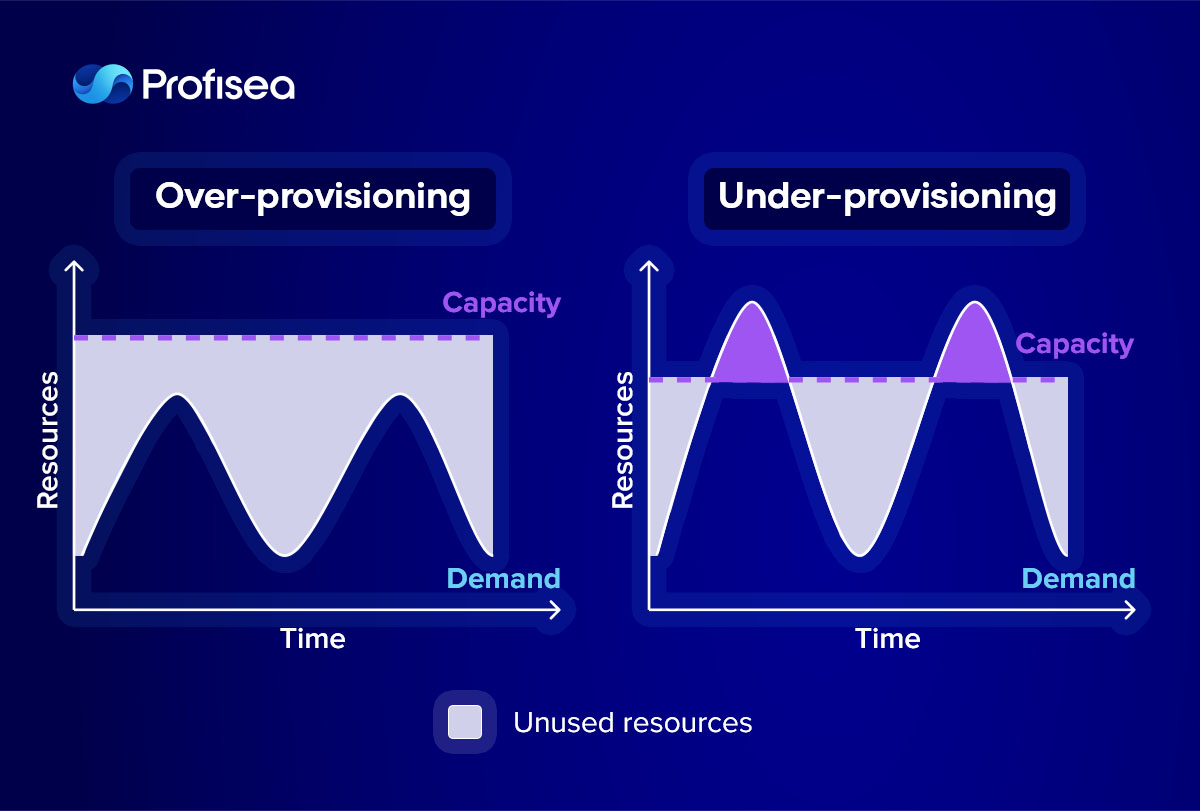

Every cloud service you buy (at the lowest possible price) should match the capacity and performance requirements of your workloads in both size and type. It’s also essential to review deployed instances for opportunities to remove or consolidate them without harming the business. That’s how cloud costs are saved. However, while rightsizing is a crucial mechanism for optimizing cloud spending, organizations often neglect it during onboarding when moving to the cloud. IT leaders frequently “lift and shift” their infrastructure and postpone sizing instances properly until later.

In addition, many IT managers, fearing under-provisioning, follow the rule “the more, the better” and provision large instances even for small workloads. Hasty decisions that prioritize speed and expected performance over cost leave organizations with mis-sized services and large amounts of unused resources.
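Rightsizing boils down to comparing observed peak usage with what is actually provisioned. The following minimal sketch, an illustration rather than any provider’s tool, picks the cheapest size that still covers peak vCPU and memory usage plus a safety headroom. The size names, specs, and hourly prices are hypothetical, and the 20% headroom is an assumption you would tune per workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSize:
    name: str          # hypothetical size label, not a real provider SKU
    vcpus: int
    memory_gib: float
    hourly_usd: float  # hypothetical price, not a real quote


# Hypothetical catalog, for illustration only.
CATALOG = [
    InstanceSize("small", 2, 4.0, 0.05),
    InstanceSize("medium", 4, 8.0, 0.10),
    InstanceSize("large", 8, 16.0, 0.20),
    InstanceSize("xlarge", 16, 32.0, 0.40),
]

HOURS_PER_MONTH = 730  # common approximation of hours in a month


def rightsize(peak_vcpus: float, peak_memory_gib: float,
              headroom: float = 0.2, catalog=CATALOG) -> InstanceSize:
    """Return the cheapest size covering observed peak usage plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    fits = [s for s in catalog
            if s.vcpus >= need_cpu and s.memory_gib >= need_mem]
    if not fits:
        raise ValueError("no catalog size covers this workload")
    return min(fits, key=lambda s: s.hourly_usd)


# An "xlarge" instance whose monitoring shows peaks of 2.5 vCPUs and 5 GiB.
current = CATALOG[-1]
suggested = rightsize(peak_vcpus=2.5, peak_memory_gib=5.0)
monthly_savings = (current.hourly_usd - suggested.hourly_usd) * HOURS_PER_MONTH
print(suggested.name, round(monthly_savings, 2))
```

With these made-up numbers, the function suggests `medium` and cuts the hypothetical bill by about $219 a month. Peak rather than average usage is used on purpose, so that the smaller size still survives traffic spikes.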

3. Idle resources

What happens when unused resources accumulate? Like any other waste, cloud waste piles up when:

- Resources are unmanaged

- Resources are oversized or too numerous

- Resources are lost

- Savings options are overlooked

- Regular health checks and cleaning are not performed

With the right visualization and visibility in place, modern tools and platforms let us see, analyze, and detect unmanaged and lost resources in the cloud environment. What should you do once cloud waste is discovered? Either DevOps experts clean it up manually on a regular basis, or you use a platform that visualizes the cloud infrastructure and lets you remove cloud junk right there, in one or two clicks.
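Detecting the waste categories listed above is mostly a matter of applying simple rules to an inventory of resources and their metrics. This sketch assumes a hypothetical inventory format: the `Resource` fields, the `owner` tag convention, and the 5% idle threshold are illustrative assumptions, not any provider’s API. It flags unmanaged, idle, and lost resources.

```python
from dataclasses import dataclass, field
from typing import Optional

# Assumption: under 5% average CPU over the lookback window counts as idle.
IDLE_CPU_THRESHOLD = 5.0


@dataclass
class Resource:
    resource_id: str
    kind: str                          # "vm" or "volume" in this sketch
    avg_cpu_percent: Optional[float]   # None when no CPU metric applies
    attached: bool = True              # for volumes: attached to a VM?
    tags: dict = field(default_factory=dict)


def find_waste(resources):
    """Return (resource_id, reasons) for every resource that looks like waste."""
    findings = []
    for r in resources:
        reasons = []
        if "owner" not in r.tags:
            reasons.append("unmanaged: no owner tag")
        if (r.kind == "vm" and r.avg_cpu_percent is not None
                and r.avg_cpu_percent < IDLE_CPU_THRESHOLD):
            reasons.append(f"idle: {r.avg_cpu_percent:.1f}% average CPU")
        if r.kind == "volume" and not r.attached:
            reasons.append("lost: volume not attached to any VM")
        if reasons:
            findings.append((r.resource_id, reasons))
    return findings


# Hypothetical inventory, e.g. exported from a monitoring system.
inventory = [
    Resource("vm-web-1", "vm", 42.0, tags={"owner": "web-team"}),
    Resource("vm-test-7", "vm", 1.3, tags={"owner": "qa"}),
    Resource("vol-0a1", "volume", None, attached=False),
]
findings = find_waste(inventory)
for resource_id, reasons in findings:
    print(resource_id, "->", "; ".join(reasons))
```

Here `vm-web-1` passes every check, `vm-test-7` is reported as idle, and `vol-0a1` is reported as both unmanaged and lost. Whether a flagged resource is then deleted, downsized, or simply reassigned an owner remains a human (or policy) decision.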

4. Resources running 24/7

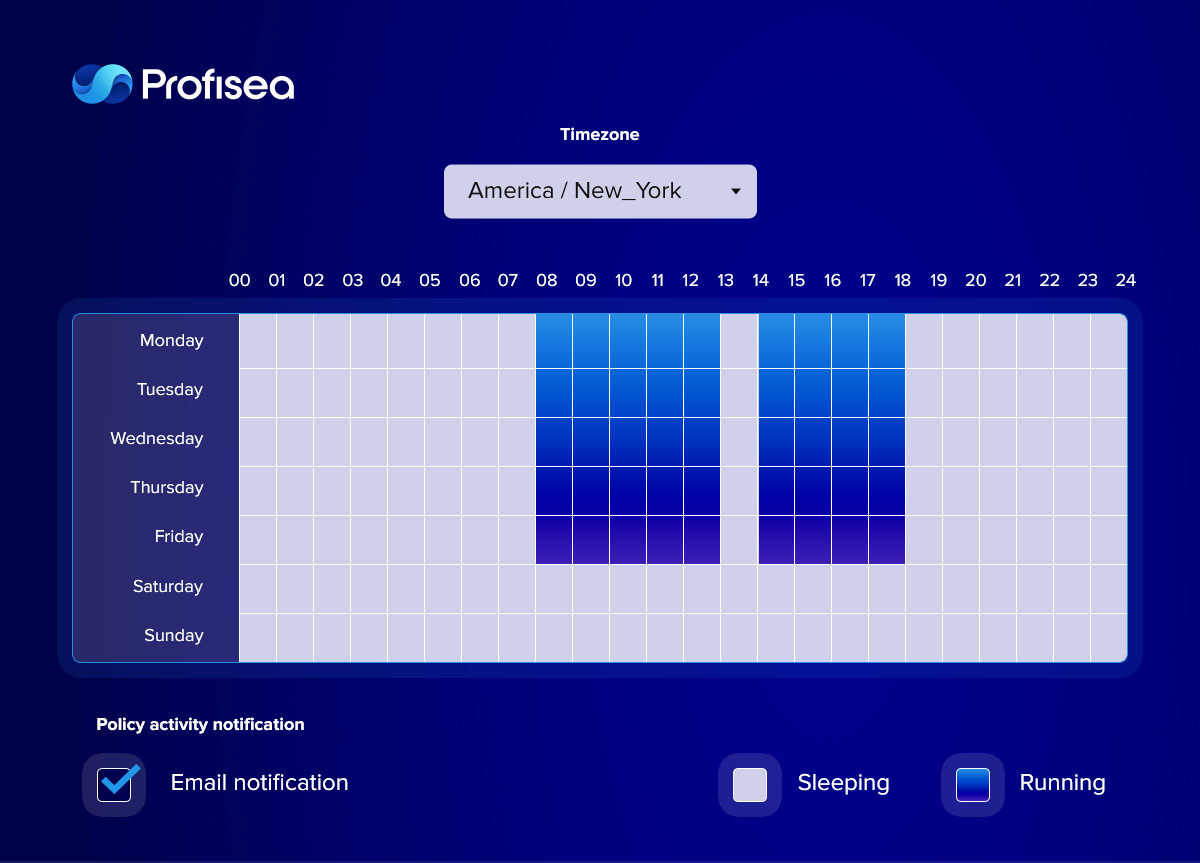

CSPs (Cloud Service Providers) promote a pay-as-you-go pricing model, so you pay for resources only while they run. Yet around 50% of any organization’s resources serve non-production processes, such as development or quality assurance, and do not need to run 24/7. Leaving them on around the clock means paying for hours nobody uses.
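The arithmetic behind scheduling is simple: a non-production instance that runs only during working hours is billed for a fraction of the 168 hours in a week. This sketch assumes a 12-hour, five-day schedule and a hypothetical $0.10 hourly rate. Real savings will be somewhat lower, because charges such as attached storage keep accruing while an instance is stopped.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def schedule_savings(hourly_usd: float, start_hour: int, end_hour: int,
                     workdays: int = 5):
    """Compare an always-on instance with one running start_hour..end_hour on workdays.

    Returns (hours on per week, fraction of hours saved, cost saved per week).
    """
    if not 0 <= start_hour < end_hour <= 24:
        raise ValueError("expected 0 <= start_hour < end_hour <= 24")
    if not 1 <= workdays <= 7:
        raise ValueError("workdays must be between 1 and 7")
    on_hours = (end_hour - start_hour) * workdays
    saved_fraction = 1 - on_hours / HOURS_PER_WEEK
    saved_usd = hourly_usd * (HOURS_PER_WEEK - on_hours)
    return on_hours, saved_fraction, saved_usd


# A dev instance at a hypothetical $0.10/hour, running 08:00-20:00 on weekdays.
on_hours, saved_fraction, saved_usd = schedule_savings(0.10, 8, 20)
print(on_hours, f"{saved_fraction:.0%}", round(saved_usd, 2))
```

Running 60 of 168 hours means roughly 64% fewer billed compute hours for that instance, before accounting for any charges that continue while it is stopped.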

Top CSPs help users build instance scheduling into their cloud cost optimization strategy by offering cloud-native tools such as AWS Instance Scheduler, Google Cloud Scheduler, and Azure Logic Apps (which replaced Azure Scheduler).

Although these solutions appear simple to deploy, hidden complexities can arise in their implementation, configuration, and maintenance. Multiply these challenges by the complex environment of an organization with many accounts and isolated teams, and you get a confusing web of scheduling constraints that only professional cloud experts armed with state-of-the-art technology and practices can untangle.

5. Neglecting pricing opportunities like Spot Instances, RIs, and Savings Plans

Considering all the benefits, building an IT environment in the cloud is an excellent idea. Yet if I had a nickel for every time a customer said it’s expensive to run workloads on AWS, GCP, or Microsoft Azure, I’d be a billionaire. It doesn’t have to be costly, though, if you know about savings options like Reserved Instances (RIs), Savings Plans, and Spot Instances.

- Reserved Instances are a financial commitment to specific capacity for one or three years in exchange for a lower price

- Savings Plans are a more flexible alternative to RIs: you commit to a consistent amount of compute usage (measured in dollars per hour) for one or three years, and the discount applies largely regardless of how you use it

- Spot Instances are a cloud provider’s spare, unused capacity, sold at a steep discount of up to 90%. They are temporary: the provider can reclaim them after a 30-120 second notice
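To see how these options compare, remember the key trade-off: a commitment is billed for every hour whether you use it or not, while on-demand and Spot capacity are billed only for the hours you run. This sketch models that trade-off with hypothetical numbers: a $0.10 on-demand rate, a 40% commitment discount, a 70% Spot discount, and 10% extra compute to redo work lost to Spot interruptions. It treats RIs and Savings Plans alike as a flat hourly commitment, which simplifies how providers actually bill them.

```python
HOURS_PER_MONTH = 730  # common approximation of hours in a month


def monthly_costs(on_demand_usd: float, hours_used: float,
                  commit_discount: float, spot_discount: float,
                  spot_rework: float = 0.0) -> dict:
    """Monthly cost of one instance under three simplified pricing models."""
    return {
        # Billed only for the hours the instance actually runs.
        "on_demand": on_demand_usd * hours_used,
        # RI / Savings Plan modeled as billed for every hour of the month.
        "commitment": on_demand_usd * (1 - commit_discount) * HOURS_PER_MONTH,
        # Spot: discounted, plus extra hours to redo interrupted work.
        "spot": on_demand_usd * (1 - spot_discount) * hours_used * (1 + spot_rework),
    }


def break_even_hours(commit_discount: float) -> float:
    """Monthly usage above which a commitment beats on-demand pricing."""
    return (1 - commit_discount) * HOURS_PER_MONTH


steady = monthly_costs(0.10, HOURS_PER_MONTH, 0.40, 0.70, spot_rework=0.10)
part_time = monthly_costs(0.10, 200, 0.40, 0.70, spot_rework=0.10)
for label, costs in (("24/7", steady), ("200 h", part_time)):
    print(label, {k: round(v, 2) for k, v in costs.items()})
print("break-even:", round(break_even_hours(0.40)), "hours/month")
```

With these made-up figures, a 24/7 workload costs less under the commitment than on demand, and less still on Spot if it tolerates interruptions. At 200 hours a month, the commitment costs more than plain on-demand, because a 40% discount only pays off above roughly 438 hours of use per month.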

When deciding which savings model is best for you, gather and analyze information about your needs, develop a detailed plan, and make sure you have all the solutions and tools you need to implement it properly. Or you can always shake hands with experienced DevOps engineers who will architect a balanced cost optimization strategy for your organization.

Bottom line

The challenge of gaining visibility to control cloud costs and developing the right cost optimization strategy for your organization has led to the rise of the “FinOps” methodology. FinOps is “the evolving cloud financial management discipline that enables organizations to maximize business value by helping engineering, finance, technology, and business teams collaborate on data-based cost decisions.”

Profisea’s experienced Cloud FinOps experts can temporarily join your team to develop a well-architected FinOps strategy, grounded in FinOps best practices, that drives your organization’s evolution and keeps cloud cost swelling in check.