Microservices Architecture: Deployment Know-How

For the past several months, we’ve been sorting out everything microservices-related, from team organization, user interfaces, and data storage to distributed systems and security concepts, API gateways, registries, and more. You already know how to apply the microservice architecture to build a shipment application as a set of services. Now it’s time to wrap up and cross the finish line by digging into deployment patterns.

As we’ve mentioned, microservices are stand-alone artifacts that are developed and scaled independently. To give them the performance and availability they need, you have to deploy each service as multiple instances. That means isolating services from one another and choosing an appropriate deployment pattern.

5 Things to Remember Before Deploying

- You’ll want to keep your app’s deployment process simple and cost-effective;

- In most cases, your team is going to write the services in different languages and frameworks;

- Your services will have numerous versions; still, the deployment of each instance should be reliable, quick, and easy;

- You’ll want to be able to scale or limit the hardware resources used by services;

- You’re going to track each instance’s behavior, so the monitoring process should also be efficient.

How to Package Microservices?

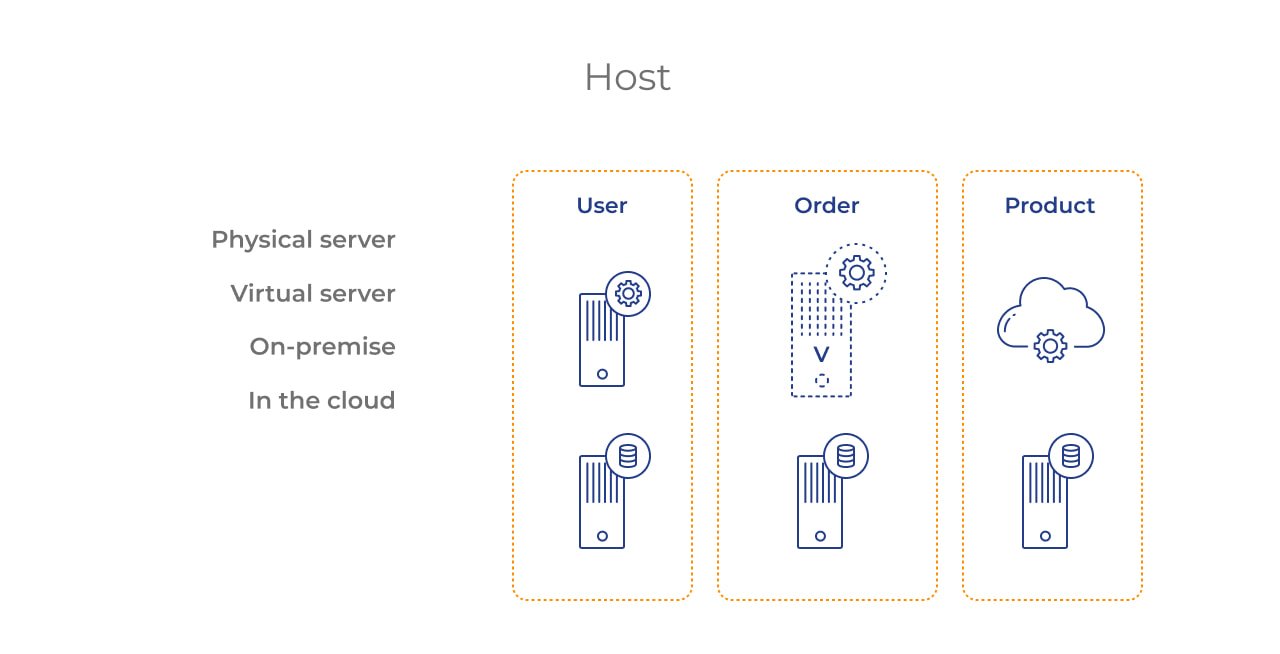

Overall, you have two ways of running your instances: on physical servers or on virtual machines, either on-premises or in the cloud.

Each approach in detail:

- Physical servers have their own fixed memory capacity, processing power, network, and data storage.

- Virtual machines (VMs) run on the same physical servers with the same fixed capacity, but they expose virtual CPU, memory, and network, which lets you set limits on the resources your services consume.

There is one more trick for simplifying and automating deployment: package each service as a container image and run it with container management tools.

4 Microservices Deployment Patterns

Once you’ve decided where your instances will run, you can follow one of these patterns. To choose, consider the software and hardware capacities you need, the forecasted load on your app, and the 5 things to remember we’ve listed above.

1st – Single Microservice Instance per One Host or VM

As the name says, you deploy each instance on its own host or VM. This pattern isolates microservice instances from one another and caps each instance’s resource consumption at the capacity of a single host or VM.

In the case of virtual infrastructure, you package the whole service as a VM image and deploy each instance as a separate machine. For example, Netflix packages its services as Amazon Machine Images and deploys the instances on EC2.

This approach also rules out conflicts over resources and dependency versions, and it keeps instance management, monitoring, and redeployment straightforward.

2nd – Multiple Instances per One Host/VM

If needed, you can run several instances of different services on a single host or VM. One way to do this is to deploy the services as web applications on a shared Tomcat or Jetty server, or as OSGi bundles. This can be more beneficial than the 1st pattern thanks to more efficient resource utilization.

However, you have to make sure that your services and their dependency versions don’t conflict. It’s also harder to coordinate and limit the system resources assigned to each instance.
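To make the dependency risk concrete, here is a minimal sketch of a check you might run before co-locating services on one host. The service names, library names, and versions are hypothetical, and the function is an illustration rather than part of any particular tool.

```python
from collections import defaultdict


def find_version_conflicts(services):
    """Return {dependency: {version: [service, ...]}} for every dependency
    that co-located services pin to more than one version."""
    versions_by_dep = defaultdict(lambda: defaultdict(list))
    for service, deps in services.items():
        for dep, version in deps.items():
            versions_by_dep[dep][version].append(service)
    # Keep only dependencies requested in two or more distinct versions.
    return {
        dep: {v: sorted(names) for v, names in versions.items()}
        for dep, versions in versions_by_dep.items()
        if len(versions) > 1
    }


# Hypothetical services that would share one host and one runtime.
shared_host = {
    "orders": {"json-lib": "2.1", "http-client": "4.5"},
    "billing": {"json-lib": "3.0", "http-client": "4.5"},
}

conflicts = find_version_conflicts(shared_host)
print(conflicts)
```

Here `json-lib` is reported because the two services need different versions, while `http-client` is not. With one instance per host or per container, this check becomes unnecessary because each instance carries its own dependencies.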

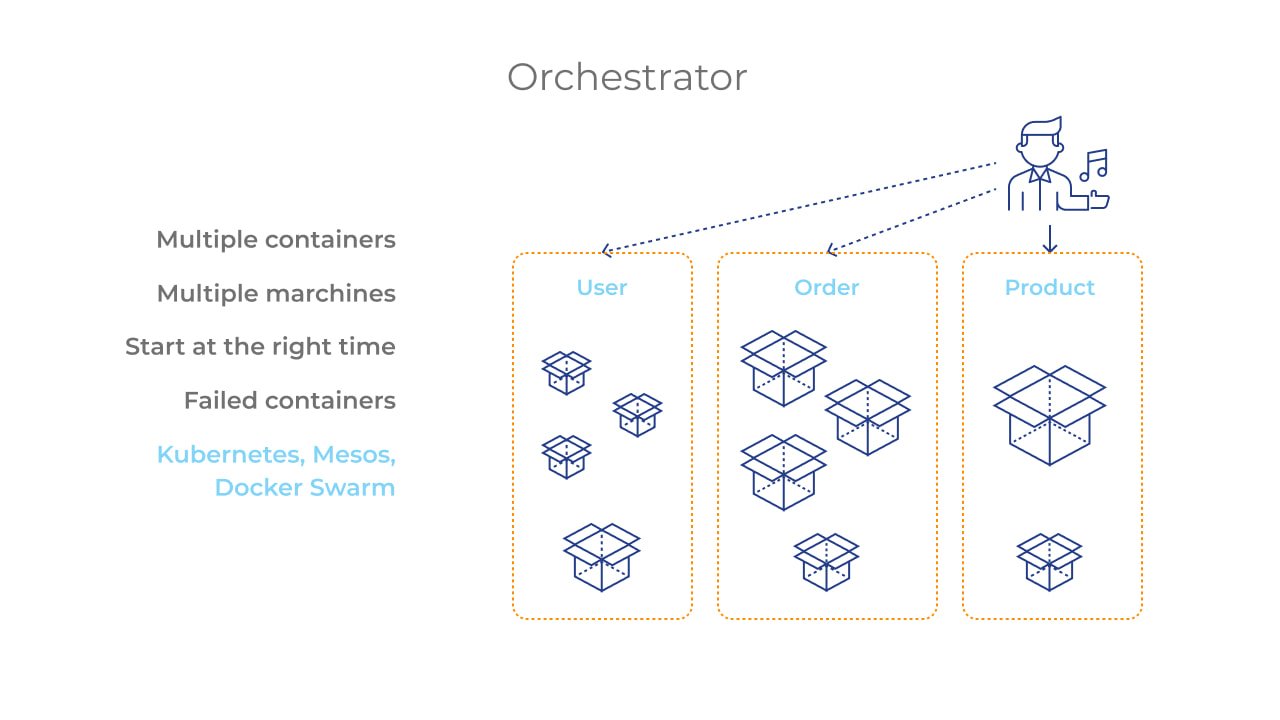

3rd – One Instance per One Container (The Art of Orchestration)

When your app’s architecture gets too complicated, you risk getting lost in the packaging process, with all its dependencies and system capacity parameters. Here, as we’ve said earlier, containerization comes in handy. A container image captures all the technology specifics you used while developing each service. As a result, you get an image that contains all the right dependencies and keeps instances isolated. This improves consistency, so you can launch and stop all your services in precisely the same way.

When you deploy instances as containers, it’s easier to scale the service up and down: you just manage the number of container instances. With this pattern, you also have full control over CPU and memory limits. It’s also a much faster way to run microservices through every stage, from development to testing and production.
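As a rough illustration of both points (resource limits and scaling by instance count), the sketch below builds `docker run` commands with the `--memory` and `--cpus` flags. It only assembles the commands and does not execute them; the image name, service name, and limit values are assumptions made up for the example.

```python
import shlex


def docker_run_command(image, name, memory="256m", cpus=0.5):
    """Build (but do not execute) a `docker run` command with resource caps."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--memory", memory,   # memory limit for the container
        "--cpus", str(cpus),  # number of CPUs the container may use (fractional allowed)
        image,
    ]


def scale(image, service, replicas):
    """One command per container instance; scaling means changing `replicas`."""
    return [
        docker_run_command(image, f"{service}-{i}")
        for i in range(1, replicas + 1)
    ]


# Hypothetical image and service names.
commands = scale("shipment/tracking:1.0", "tracking", replicas=3)
for cmd in commands:
    print(shlex.join(cmd))
```

In practice an orchestrator issues the equivalent of these commands for you, which is where the next part comes in.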

However, you’ll need to orchestrate all the containers running across multiple VMs. This means handling challenges such as:

- finding out how to start the right container at the right time;

- managing storage and system resource usage;

- establishing how containers communicate with each other;

- dealing with failed containers or hardware, and so on.
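Most orchestrators address these challenges with some form of reconciliation: compare the desired state with what is actually running, then start or stop containers to close the gap. The sketch below simulates that idea in memory. `Cluster` and `reconcile` are hypothetical names, not the API of any real orchestrator, and the sketch ignores scheduling, storage, and networking.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """In-memory stand-in for a set of hosts running containers."""
    running: dict = field(default_factory=dict)  # container id -> service name
    _ids: count = field(default_factory=count)

    def start(self, service):
        cid = f"{service}-{next(self._ids)}"
        self.running[cid] = service
        return cid

    def stop(self, cid):
        self.running.pop(cid, None)


def reconcile(cluster, desired):
    """Start or stop containers until each listed service has its desired count."""
    for service, want in desired.items():
        have = [cid for cid, svc in cluster.running.items() if svc == service]
        for _ in range(want - len(have)):  # too few: start the missing ones
            cluster.start(service)
        for cid in have[want:]:            # too many: stop the surplus
            cluster.stop(cid)


cluster = Cluster()
desired = {"tracking": 2, "billing": 1}
reconcile(cluster, desired)

# Simulate a crashed container, then let the next pass repair it.
crashed = next(iter(cluster.running))
cluster.stop(crashed)
reconcile(cluster, desired)
print(sorted(cluster.running.values()))  # ['billing', 'tracking', 'tracking']
```

A real orchestrator runs a loop like this continuously and also decides which host each container lands on.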

Fortunately, modern orchestrators automate all these tasks and cut the time and effort usually spent on manual operations. Docker is the most popular container platform, and Kubernetes is the most widely used orchestrator; Amazon, IBM, Azure, and Google also offer their own container management services.

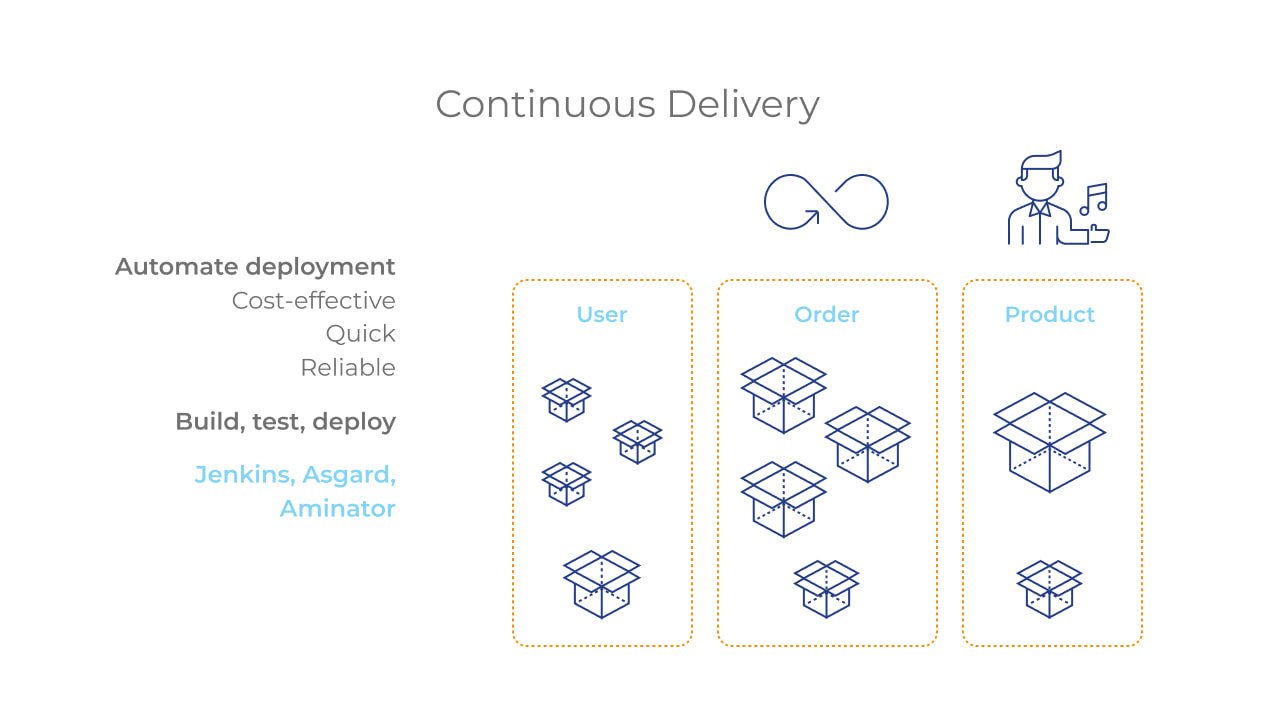

Continuous Delivery (Your Best Friend)

It’s not a deployment pattern in itself, but it’s what you should aim for to make your product’s path from development to production as robust as possible. Continuous Delivery is a DevOps practice that uses automated tools to streamline building, testing, versioning, and delivering code. These tools package the finished code into a container, then signal the orchestrator to deploy all the pieces of your architecture. Repeatedly testing your software, processes, and application ecosystem before deployment to production lets you discover most errors early and reduce their impact.

If you follow the 3rd pattern and every element of your microservices architecture is packaged as a container, Continuous Delivery (CD) lets you automate the entire deployment process. Frequently recommended CD tools include Jenkins and Buddy, along with Netflix’s Asgard and Aminator for AWS deployments; Jira, an issue tracker, is often integrated with these pipelines to track releases. AWS, Azure, and IBM also offer high-quality pipeline management services.
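The core idea of such a pipeline can be sketched in a few lines: run the stages in order and stop at the first failure, so that code with failing tests never reaches deployment. The stage functions and the artifact fields below are hypothetical placeholders; a real pipeline would call your build tool, test runner, and orchestrator instead.

```python
def run_pipeline(stages, artifact):
    """Run stages in order; stop at the first failure so later stages never run."""
    completed = []
    for name, stage in stages:
        if not stage(artifact):
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "deployed", "failed_stage": None, "completed": completed}


# Hypothetical stages: each takes the artifact dict and returns True on success.
def build(artifact):
    artifact["image"] = f"{artifact['service']}:{artifact['commit']}"
    return True


def run_tests(artifact):
    return artifact.get("tests_pass", False)


def deploy(artifact):
    artifact["deployed_image"] = artifact["image"]
    return True


STAGES = [("build", build), ("test", run_tests), ("deploy", deploy)]

good = run_pipeline(STAGES, {"service": "tracking", "commit": "abc123", "tests_pass": True})
bad = run_pipeline(STAGES, {"service": "tracking", "commit": "def456", "tests_pass": False})
print(good["status"], bad["status"], bad["failed_stage"])  # deployed failed test
```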

4th – Serverless Deployment Environments

One of the most commonly used patterns these days is to rely on a serverless, automated deployment platform provided by a public cloud vendor. The best-known environments of this kind are AWS Lambda, Azure Functions, and Google Cloud Functions. They come with everything needed to present your service as an abstraction over a set of highly available instances.

Such an infrastructure relieves you of the need to operate and manage pre-allocated resources (physical or virtual servers, hosts, containers) yourself. Everything is done for you on a pay-as-you-go basis: you pay only for the vendor resources your service actually consumes while running.

To deploy a microservice via a serverless environment:

- package the service’s code (as a ZIP file or similar);

- upload it to the chosen platform’s infrastructure;

- state the desired performance characteristics.

From there, the platform runs your code only when it is triggered, and the FaaS cloud computing service automatically scales it to handle the load.
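The code you package is usually just a function that the platform calls once per trigger. Below is a minimal sketch in the style of an AWS Lambda Python handler, which receives an `event` and a `context`. The `shipment_id` field, the fixed `in_transit` status, and the `statusCode`/`body` response shape are assumptions for illustration; the real event and response formats depend on the trigger you configure.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per trigger (AWS Lambda style)."""
    shipment_id = event.get("shipment_id")
    if shipment_id is None:
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "shipment_id is required"}),
        }
    # Real code would look the shipment up; this sketch returns a fixed status.
    return {
        "statusCode": 200,
        "body": json.dumps({"shipment_id": shipment_id, "status": "in_transit"}),
    }


# Local invocation for a quick check; on the platform, the trigger supplies `event`.
response = handler({"shipment_id": "S-42"}, context=None)
print(response["statusCode"])  # 200
```

Note that the function keeps no state between calls, which is what allows the platform to run as many copies as the load requires.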

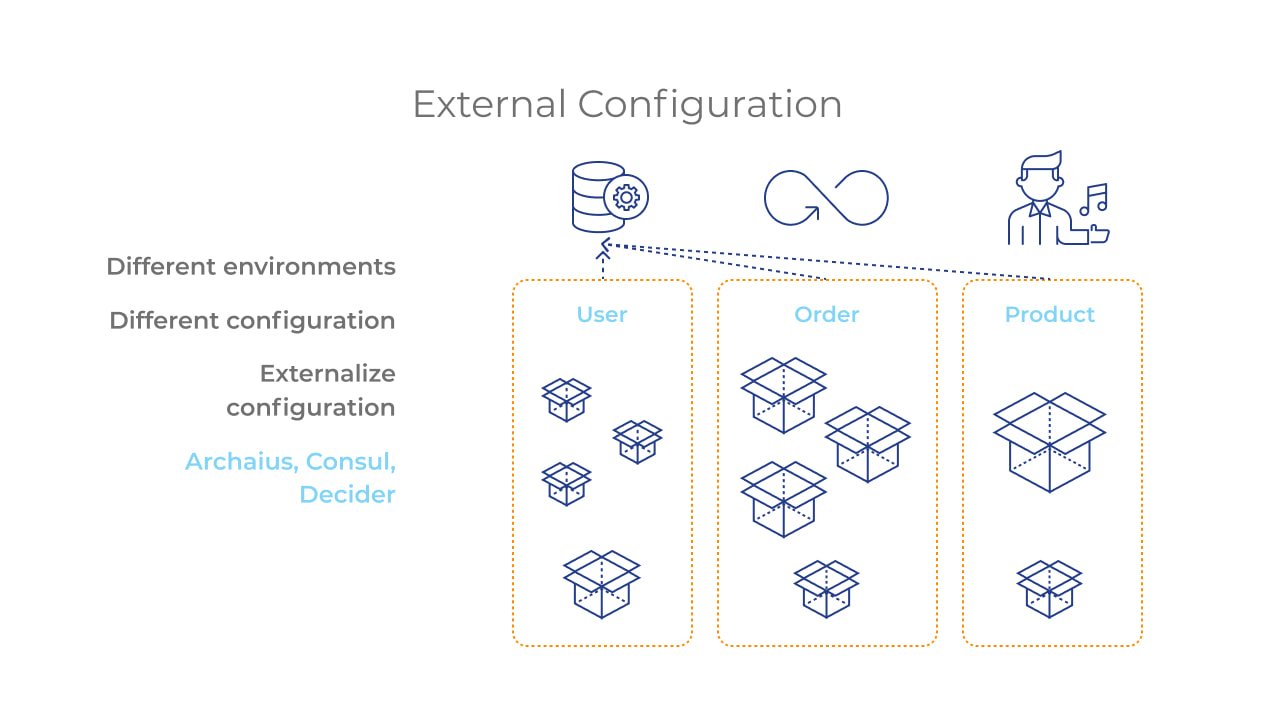

Generally, some of your microservices will run in several environments, which means different runtime configurations for the same service. Therefore, when you plan a microservices-based application, consider externalizing each service’s configuration into a centralized configuration store (Consul, Decider, etc.) to simplify future deployments.
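A minimal sketch of externalized configuration is shown below, assuming the store can be read as key/value pairs per service and environment. A plain dictionary stands in for the store here; the key layout (`service/environment`), the setting names, and the precedence order are assumptions for the example, not the behavior of any specific product.

```python
import os

# Fallback values baked into the service; everything else comes from outside.
DEFAULTS = {"DB_URL": "postgres://localhost/shipments", "LOG_LEVEL": "INFO"}


def load_config(store, service, environment, env=os.environ):
    """Merge settings with precedence: defaults < config store < environment variables."""
    config = dict(DEFAULTS)
    config.update(store.get(f"{service}/{environment}", {}))
    config.update({key: env[key] for key in DEFAULTS if key in env})
    return config


# Hypothetical contents of a centralized store, keyed by service and environment.
store = {
    "tracking/staging": {"DB_URL": "postgres://staging-db/shipments"},
    "tracking/production": {
        "DB_URL": "postgres://prod-db/shipments",
        "LOG_LEVEL": "WARNING",
    },
}

staging = load_config(store, "tracking", "staging", env={})
production = load_config(store, "tracking", "production", env={"LOG_LEVEL": "ERROR"})
print(staging["DB_URL"], production["LOG_LEVEL"])
```

The same service image can then be deployed unchanged to every environment, with only the store entry differing.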

Summing Up

As you see, deploying microservices can be tricky. However, tools like containers, orchestrators, and continuous delivery pipelines go a long way toward taming the complexity of an architecture. They automate and streamline not just development, QA, and version control, but also deployment.

As DevOps professionals, we’re fully proficient with these tools and always ready to share our expertise to benefit your project. Reach out, and let’s see how we can help you reach your business goals.